[Back-End & Database]

By Arun Kumar Fri, Jun 5, 2020

Headless commerce is the separation of the back end and the front end of an e-commerce application into two distinct layers. It lets you present product catalogues without the constraints of a traditional e-commerce platform, and it gives you full control over the presentation and aesthetics of the products.

Developers decouple the “head”, or front-end template, from the back-end data, which gives them the freedom to modify and optimize the styling of various product categories.

[Django Python]

By Aayush Saini Wed, Mar 11, 2020

In this blog, we will learn about the use of the Polymorphic model of Django.

Polymorphism: Polymorphism means the same function name (but with different signatures) being used for different types.

Example:

print(len("geeks"))  # output: 5

print(len([10, 20, 30]))  # output: 3

In the above example, len is a function that is used for different types. The polymorphic model is also based on polymorphism.
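The same idea applies to your own classes. The following is a minimal sketch in plain Python, not Django's polymorphic model itself; the `Circle`, `Square`, and `total_area` names are hypothetical and chosen only for illustration.

```python
import math


class Circle:
    def __init__(self, radius):
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2


class Square:
    def __init__(self, side):
        self.side = side

    def area(self):
        return self.side ** 2


def total_area(shapes):
    # Each object supplies its own area(); the caller never checks the type.
    return sum(shape.area() for shape in shapes)


if __name__ == "__main__":
    print(total_area([Square(2), Square(3)]))
```

Here `area` plays the role that `len` plays in the example above: one name, different behaviour depending on the type of the object.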

I have used the polymorphic model in many projects at Excellence Technologies.

[Back-End & Database Uncategorized]

By Aayush Saini Wed, Mar 11, 2020

What is Web Scraping? Web Scraping is the process of data extraction from various websites.

DIFFERENT LIBRARY/FRAMEWORK FOR SCRAPING:

Scrapy:- If you are dealing with a complex scraping operation that requires enormous speed and low power consumption, then **Scrapy** would be a great choice.

Beautiful Soup:- If you’re new to programming and want to work on web scraping projects, you should go for **_Beautiful Soup_**. You can learn it easily and will be able to perform operations very quickly, up to a certain level of complexity.
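To make "data extraction" concrete, here is a minimal sketch that pulls link targets out of an HTML string. It deliberately uses only Python's standard-library `html.parser` as a stand-in, not Scrapy or Beautiful Soup, and the `LinkCollector` and `extract_links` names are hypothetical.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the current tag.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


if __name__ == "__main__":
    page = '<p><a href="/a">A</a> and <a href="/b">B</a></p>'
    print(extract_links(page))
```

Libraries such as Scrapy and Beautiful Soup wrap this kind of parsing in a friendlier interface and add features like selectors and crawling.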

[Back-End & Database Python]

By Aayush Saini Wed, Mar 11, 2020

In this blog, we try something interesting. Suppose we have a fully working website and we want to use that website's login API from our own API. For this we use the Python library requests, and we will see how it works. So let’s start.

Problem: At this stage we don’t know where to find the API URL, and we don’t know which URL will work for login.

[Back-End & Database Python]

By Aayush Saini Wed, Feb 12, 2020

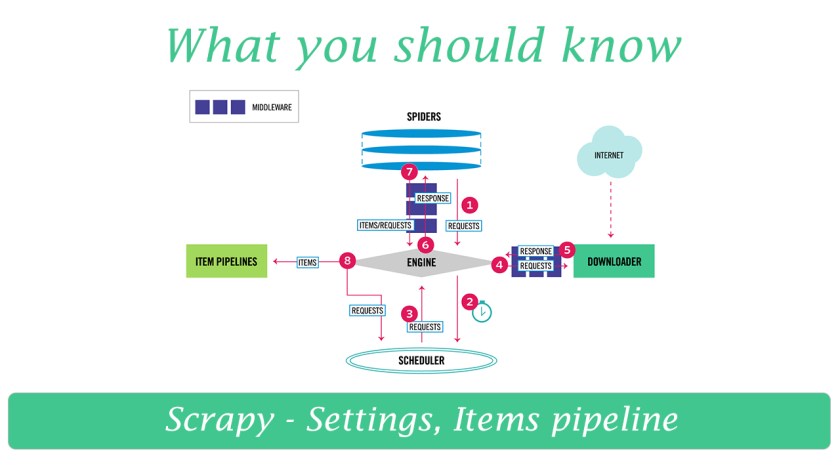

Scrapy Settings:

If you’re looking to customize your scraper, you’ll want to learn how to do it without hassle. Using Scrapy settings, you can conveniently customize how your crawler behaves. That’s not all: Scrapy also allows you to customize other components such as the core mechanism, pipelines, and spiders. You’ll typically find a settings.py file in your project directory that lets you customize your scraper’s settings.
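As a rough illustration, a settings.py might contain entries like the following. The setting names are standard Scrapy settings, but the project name `tutorial`, the pipeline path, the user agent string, and all values are assumptions made up for this sketch.

```python
# settings.py for a hypothetical Scrapy project named "tutorial"

BOT_NAME = "tutorial"

# Respect robots.txt rules published by the target site.
ROBOTSTXT_OBEY = True

# Wait this many seconds between requests to the same site.
DOWNLOAD_DELAY = 1.0

# Upper bound on requests Scrapy performs at the same time.
CONCURRENT_REQUESTS = 8

# Identify the crawler to the sites it visits (placeholder contact URL).
USER_AGENT = "tutorial-bot (+https://example.com/contact)"

# Enable an item pipeline; lower numbers run earlier (range 0-1000).
ITEM_PIPELINES = {
    "tutorial.pipelines.TutorialPipeline": 300,
}
```

Scrapy reads these module-level names when the crawler starts, so changing crawl behaviour is usually a matter of editing this one file.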

[Back-End & Database Django Python]

By Aishwary Kaul Tue, Feb 11, 2020

Custom commands, which many have come to know as management commands or utility commands, are one of the essential features provided by Python frameworks such as Django. These commands are quite useful for tasks that require many different methods to complete.

[Back-End & Database Python]

By Aayush Saini Tue, Feb 11, 2020

Concurrency in Python is no doubt a complex topic and one that is hard to understand. It also doesn’t help that there are multiple ways to write concurrent programs. Many people find themselves asking questions like:

Should I spin up multiple threads?

Use multiple processes?

Use asynchronous programming?

Here is the thing: use async IO when you can, and use threading when you must.
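A minimal sketch of the two approaches side by side may help. The function names are hypothetical, and `asyncio.sleep` and `time.sleep` stand in for real non-blocking and blocking I/O respectively.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


async def fetch_async(name, delay):
    # Stand-in for non-blocking I/O, such as an async HTTP call.
    await asyncio.sleep(delay)
    return name


def fetch_blocking(name, delay):
    # Stand-in for a blocking call from a library with no async API.
    time.sleep(delay)
    return name


async def run_async(names):
    # gather runs the coroutines concurrently on a single thread
    # and returns their results in input order.
    return await asyncio.gather(*(fetch_async(n, 0.1) for n in names))


def run_threads(names):
    # Executor.map also yields results in input order.
    with ThreadPoolExecutor(max_workers=len(names)) as pool:
        return list(pool.map(lambda n: fetch_blocking(n, 0.1), names))


if __name__ == "__main__":
    names = ["a", "b", "c"]
    print(asyncio.run(run_async(names)))
    print(run_threads(names))
```

The async version is the "when you can" case, since it needs code that can be awaited. The thread pool is the "when you must" case, for blocking calls you cannot rewrite.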

[Django Python]

By Aishwary Kaul Mon, Sep 30, 2019

In this blog we will see how to implement FCM, or Firebase Cloud Messaging, services.

[Back-End & Database Django Python]

By manish Thu, Jun 27, 2019

Until now we have seen the basics of models, views, and serializers. But the most important aspect of any project is its database operations.

In this blog post we will look at some database operations that were used in a live project and get more in-depth knowledge of them.

[Back-End & Database Django Python]

By manish Sat, Jun 22, 2019

In this part we will look at how to customize the admin interface.

[Back-End & Database Django Python]

By manish Fri, Jun 21, 2019

In this blog post we will look at groups and permissions.

[Django Python]

By manish Fri, Jun 21, 2019

In this part we will look at authentication for APIs.

[Back-End & Database Django Python]

By manish Mon, Jun 17, 2019

This is one of the exciting features of DRF. Let’s see what it is.

[Back-End & Database Django Python]

By manish Mon, Jun 17, 2019

In this blog post we will see more details on the View class and also the router.

[Back-End & Database Django Python]

By manish Mon, Jun 17, 2019

In this part we will look at “Views” in DRF.

[Back-End & Database Django Python]

By manish Fri, Jun 14, 2019

Let’s get started with Django REST framework (DRF), as we saw in the last blog.

[Back-End & Database Django Python]

By manish Thu, Apr 25, 2019

In this post we will mainly look at Django models and database operations.

[Back-End & Database Django]

By manish Tue, Apr 2, 2019

In the previous blog we saw just the basics of Django, and you should have understood the basics of apps, routes, and models, at least theoretically.

[Back-End & Database Django]

By manish Tue, Apr 2, 2019

In this tutorial we will see how to use Django in our projects, focusing mainly on REST APIs.

[Back-End & Database Python]

By Aayush Saini Thu, Mar 28, 2019

Introduction: Requests is a Python module that you can use to send all kinds of HTTP requests. It is an easy-to-use library with a lot of features ranging from passing parameters in URLs to sending custom headers and SSL Verification.

We can use the requests library very easily, like this.

import requests

req = requests.get('https://www.excellencetechnologies.in/')

Now let’s install the requests library.

How to Install Requests: You can install it with pip or easy_install, or from a source tarball.